Demo installation overview

This document is a quick overview of the layout of the Mentat demonstration installation, so that newcomers know where to start looking.

News from 9 Aug 2017

- We have started to use an automated build system, which takes care of testing, documentation generation and packaging of the Mentat-ng system and related subprojects. It is available at https://alchemist.cesnet.cz/. It currently provides the following features:

  - Autogenerated project documentation for production and development code.

  - Separate Debian package repository for production and development packages.

  - File repository containing all relevant autogenerated files with SHA and GPG signatures.

- We have released version 0.4.0 of the Mentat-ng system, which contains a large number of changes and new features as well as a substantial boost in code documentation. It requires the latest versions of the pyzenkit (0.35) and pynspect (0.5) libraries, so make sure that they are upgraded as well.

- The original Debian package repository hosted at the homeproj.cesnet.cz server is not directly connected to our automated build system and will no longer be supported. Please remove the following repository from your apt configuration; it should be in the file /etc/apt/sources.list.d/cesnet-certs.list:

    deb https://homeproj.cesnet.cz/apt/certs stable main

- Please switch to the Debian package repository hosted at the alchemist.cesnet.cz server. A step-by-step guide for this operation can be found at https://alchemist.cesnet.cz/ under the Debian repository tab of the Mentat project.

- Do not remove the original Mentat repository from the file /etc/apt/sources.list.d/mentat.list:

    deb https://homeproj.cesnet.cz/apt/mentat squeeze main

  It is still valid and contains the old Perl-based version of the Mentat system. It is thinning day by day, but it currently still contains the reporting component and the web interface.

Important facts

- For a description of the Mentat system installation process, please refer to the installation guide.

- Project documentation is available at https://alchemist.cesnet.cz/mentat/doc/production/html/manual.html and it is regenerated automatically with each new version.

- If you have obtained the demo installation as a virtual server image, the SSH key you were given to access it is not protected by a passphrase. Please change it as soon as possible.

- If you have obtained the demo installation as a virtual server image, please note that a ufw firewall is running and blocks all incoming connections to all ports except SSH. This is because access to the web interface is configured with a bogus passwordless administrator account, so anyone could effectively access the data. Please adjust the firewall rules accordingly. The web interface is running on port HTTPS/443.

    # Current firewall status
    ufw status
    # If you are sure, maybe just turn it off
    ufw disable

- Due to the current migration phase and a lot of ongoing development, the init script for the Mentat system is currently not working and Mentat will not start automatically after boot. You have to start it manually (see below) and use some monitoring or other tools to detect errors and keep it running.

- Documentation is scarce. Most of the modules have built-in help, but there is no documentation per se. There are only commented configuration files, unit tests and commented source code.

- Due to the migration from the Perl-based implementation to the Python-based implementation, modules are based on two different frameworks. Besides being written in different languages, these frameworks also use different configuration file formats, have slightly different command line options, and differ in other details.

- Currently there are no indices created in the database by default. You have to create them based on your own requirements. If you want to create the default set of indices, please make sure you have the most recent Mentat package installed on your system and then execute the database management utility (note that index creation performs best while the database is still empty):

    # Make sure you are up to date
    apt-get update
    apt-get upgrade
    # Build default batch of indices
    mentat-dbmngr.py --command status --shell
    mentat-dbmngr.py --command init --shell
    mentat-dbmngr.py --command status --shell

- The web interface Hawat was built as an internal tool. Some features are tightly coupled with our reporting feature and will not work on the demo setup. The following features should work:

  - Alerts - Tool for searching the alert database

  - Briefs - Statistical view of processed data for a given time period

  - Statistics - Low level processing statistics using RRD database tools

- Project is under heavy development. Check frequently for package updates via apt.

Directory layout

- /root/mentat/packages

For convenience all custom packages related to Mentat system installation are located inside this directory.

- /etc/mentat

Configuration files for Mentat modules.

- /var/mentat

Working directory for all Mentat modules. Pid files, runlogs, log files and other runtime data are located inside this directory.

- /etc/apache

The Apache webserver is configured to serve Mentat's web interface on port HTTPS/443. For convenience, there is also a redirection configured from HTTP/80.

- /etc/cron.d

Some Mentat modules are launched periodically via cron.

- /var/lib/mongodb

MongoDB database files.

System overview

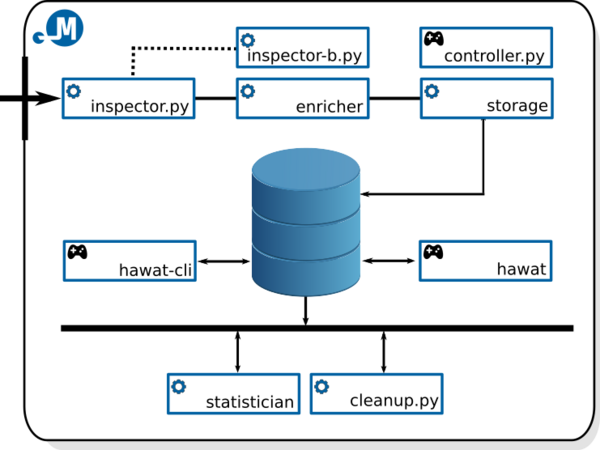

The whole Mentat sysyem is installed, however only some of the modules are enabled by default. The demo installation is configured into following topology/setup:

As you can see, there are two real-time message processing chains. The primary entry point is the queue directory of the mentat-inspector.py module. It is followed by the mentat-enricher module and finally by the mentat-storage module.

As an example of how it is done, a second inspector instance, mentat-inspector-b.py, is prepared. You may configure the first inspector to dispatch or duplicate certain messages to the second inspector instance for different processing. The configuration file for an inspector is heavily commented, which currently serves as a substitute for documentation.

Of the post-processing modules, mentat-statistician is enabled to provide statistical services, and mentat-cleanup.py is enabled to provide the data management and retention mechanism.

Communication between the real-time processing modules is simple. Messages are passed between modules using filesystem-based queue directories. Every real-time processing module has its own queue directory. Consider for example the system entry point mentat-inspector.py:

    root@mentat-demo:~# ll /var/mentat/spool/mentat-inspector.py/
    total 25M
    drwxrwxr-x  7 mentat mentat 4,0K Jan 13 13:19 .
    drwxrwxr-x 15 mentat mentat 4,0K Mar 22 13:02 ..
    drwxrwxr-x  2 mentat mentat  12M Mar 22 13:17 errors
    drwxrwxr-x  2 mentat mentat  13M Mar 23 15:23 incoming
    drwxrwxr-x  2 mentat mentat 4,0K Mar 23 15:23 pending
    drwxrwxr-x  2 mentat mentat 1,3M Jan 13 13:27 tmp

As you can see, there are the following subdirectories:

- incoming - Main queue directory. New messages must be atomically moved into this subdirectory to be processed. Do not touch the message files after the move; they now belong to the module.

- pending - Internal working queue directory holding messages that are currently being processed. It is for internal use only; never change or use any file inside this directory.

- tmp - Temporary working directory. It can be used to stage a message arriving from a different partition, so that the final move into incoming is atomic.

- errors - Storage for messages that could not be processed and had to be put aside for manual inspection.

Conclusion: Inserting a new message into Mentat's processing chain is simply a matter of atomically moving the message file into the appropriate incoming directory; the module will pick it up and process it.
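As a minimal sketch of that atomic hand-off, the following Python function stages a message in the tmp subdirectory and then renames it into incoming. It assumes that tmp and incoming are on the same filesystem, which is what makes the rename atomic on POSIX systems. The function name enqueue_message, the .idea file suffix and the placeholder payload are illustrative assumptions and not part of Mentat; the demo runs against a throwaway directory instead of /var/mentat.

```python
import os
import tempfile
import uuid


def enqueue_message(queue_dir, payload):
    """Stage payload in <queue_dir>/tmp, then atomically move it to <queue_dir>/incoming."""
    # Hypothetical naming scheme; any name unique within the queue would do.
    name = uuid.uuid4().hex + ".idea"
    tmp_path = os.path.join(queue_dir, "tmp", name)
    final_path = os.path.join(queue_dir, "incoming", name)

    # Write the complete file first, so the module never sees a partial message.
    with open(tmp_path, "w", encoding="utf-8") as handle:
        handle.write(payload)
        handle.flush()
        os.fsync(handle.fileno())

    # Rename within one filesystem is atomic on POSIX.
    os.rename(tmp_path, final_path)
    return final_path


# Demo against a temporary queue layout (not a real Mentat spool).
demo_queue = tempfile.mkdtemp()
for sub in ("tmp", "incoming"):
    os.makedirs(os.path.join(demo_queue, sub))

# Placeholder payload only; a real IDEA message carries more fields.
demo_path = enqueue_message(demo_queue, '{"Format": "IDEA0"}')
print(demo_path)
```

After the rename the file should not be touched again, in line with the rule for the incoming subdirectory.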

Quickstart

- First thing you can do is check the status of all Mentat system components:

    # The 'status' command is default, so this is a short form
    mentat-controller.py
    # And the same, but more verbose
    mentat-controller.py --command status
    # Or even
    mentat-controller.py --command=status

- All Mentat modules display help when executed with the --help option:

    # Most interesting Mentat modules for the beginning:
    mentat-controller.py --help
    mentat-inspector.py --help
    mentat-enricher --help
    mentat-storage --help
    mentat-cleanup.py --help
    mentat-ideagen.py --help
    mentat-statistician --help
    ...

- Let's start things up:

    mentat-controller.py --command start

- At this point all required Mentat modules should be running. You can verify that by listing processes, tailing log files, or using the status command introduced above.

- Now it is time to generate some dummy test data:

    mentat-ideagen.py --count 1000

- After some time the messages should reach the database and you may view them via the Hawat web interface. Alternatively, you can view the number of messages in the database using the hawat-cli interface:

    hawat-cli db view count

Receiving events from Warden

If you want to receive alerts from the Warden system and process them with Mentat, take the following steps:

- Install the Warden client library according to its documentation.

- The easiest way to receive alerts from Warden and feed them into Mentat is using warden_filer. Please register and configure it according to the documentation.

- The key configuration option in the configuration file /etc/warden_filer.cfg (default file name) is dir in the receiver section. Set it to point to the message queue of mentat-inspector.py, which is the entry point of the message processing chain in the default demo installation:

    ...
    "receiver": {
        "dir": "/var/mentat/spool/mentat-inspector.py",
        ...
    }
    ...

After starting the receiving warden_filer daemon, you should see IDEA messages appearing in the /var/mentat/spool/mentat-inspector.py/incoming directory.
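One way to check that messages are actually arriving is to count the files in each queue subdirectory. The sketch below is a read-only helper under stated assumptions: the name queue_depth is hypothetical, it relies only on the four subdirectory names listed in the system overview, and the demo uses a temporary directory instead of the real spool. On the demo installation you would pass /var/mentat/spool/mentat-inspector.py instead.

```python
import os
import tempfile

# Subdirectory names as described for the queue layout.
QUEUE_SUBDIRS = ("incoming", "pending", "tmp", "errors")


def queue_depth(queue_dir):
    """Return the number of regular files in each queue subdirectory."""
    depth = {}
    for sub in QUEUE_SUBDIRS:
        path = os.path.join(queue_dir, sub)
        count = 0
        if os.path.isdir(path):
            # Only list entries; never open or modify queue files.
            with os.scandir(path) as entries:
                count = sum(1 for entry in entries if entry.is_file())
        depth[sub] = count
    return depth


# Demo with a throwaway queue containing two fake incoming messages.
demo_queue = tempfile.mkdtemp()
os.makedirs(os.path.join(demo_queue, "incoming"))
for index in range(2):
    with open(os.path.join(demo_queue, "incoming", "msg%d" % index), "w") as handle:
        handle.write("{}")

demo_depth = queue_depth(demo_queue)
print(demo_depth)
```

A steadily growing incoming count would suggest that the inspector is not running, while a growing errors count points to messages that need manual inspection.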

Real-world performance statistics

The following information may serve as a rough reference for system resource consumption and overall system performance. These statistics were gathered on our production installation of the Mentat system, which uses the following system configuration:

    CPU: 2 x Intel(R) Xeon(R) E5-2650, 2.00GHz
    RAM: 192GB
    HDD: 1,1 TB, 15k, SAS
    OS: Debian GNU/Linux 8.7 (Jessie)
    DB: MongoDB 3.4.3, WiredTiger engine

We observe the following performance:

- System load: 4.29 (15 minute average, last month), 3.61 (15 minute average, last year)

- Database: 110 GB data size, 30 GB index size, 1.75 KB average object size, 160 mil. alerts, 5 mil. new alerts daily, 4 weeks of full alert history, 6 months of CESNET2 network related alert history

Currently, we are able to achieve a maximum throughput of 10 mil. alerts per day (empirical testing). However, this value is limited by the performance of the currently used mentat-storage daemon, which is implemented using the legacy Perl-based framework. We are working on a replacement, which should provide a notable performance increase.
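To put the daily figures into perspective, they can be converted into per-second rates. The short calculation below uses only the numbers quoted above (5 mil. new alerts daily, 10 mil. alerts per day maximum, 4 weeks of full history); the variable and function names are illustrative, and the rates are averages that say nothing about peaks.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86400


def per_second(alerts_per_day):
    """Convert a daily alert count into an average per-second rate."""
    return alerts_per_day / SECONDS_PER_DAY


daily_inflow = 5_000_000      # new alerts per day
max_throughput = 10_000_000   # empirically tested maximum per day

inflow_rate = per_second(daily_inflow)       # about 57.9 alerts/s
peak_rate = per_second(max_throughput)       # about 115.7 alerts/s
headroom = max_throughput / daily_inflow     # factor of 2.0

# Alerts accumulated over 4 weeks of full history at the current inflow.
full_history = 28 * daily_inflow             # 140,000,000

print(round(inflow_rate, 1), round(peak_rate, 1), headroom, full_history)
```

The storage daemon therefore has roughly a twofold margin over the current average inflow, and four weeks of inflow (140 mil.) is in the same ballpark as the reported 160 mil. alerts in the database.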

Source code

- https://homeproj.cesnet.cz/git/mentat-ng.git/ - main project repository

- https://homeproj.cesnet.cz/git/mentat.git/ - repository containing legacy implementation of Mentat system based on Perl. It is still required and contains part of the production code. However it is thinning day by day as more and more features are being reimplemented in Python and moved into main project repository.

Troubleshooting

Images can not be seen in web interface

If you cannot see any images in the Statistics section of the web interface, please execute the following commands manually to make sure that the resources are correctly linked to the root directory of the web application:

    mkdir -p /var/mentat/www/hawat/root/data
    ln -s /var/mentat/reports/briefer /var/mentat/www/hawat/root/data/briefer
    ln -s /var/mentat/charts /var/mentat/www/hawat/root/data/charts
    ln -s /var/mentat/reports/dashboard /var/mentat/www/hawat/root/data/dashboard
    ln -s /var/mentat/reports/reporter /var/mentat/www/hawat/root/data/reporter
    ln -s /var/mentat/rrds /var/mentat/www/hawat/root/data/rrds
    ln -s /var/mentat/reports/statistician /var/mentat/www/hawat/root/data/statistician
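The ln -s commands report an error for links that already exist, which makes them awkward to re-run. The following Python sketch creates the same six links but skips any that are already present. The function name link_resources and its var_dir parameter are assumptions made for illustration; the demo runs in a temporary directory, whereas on a real system you would call link_resources() as root with the default /var/mentat.

```python
import os
import tempfile

# Link name under www/hawat/root/data -> target relative to the working directory.
LINKS = {
    "briefer": "reports/briefer",
    "charts": "charts",
    "dashboard": "reports/dashboard",
    "reporter": "reports/reporter",
    "rrds": "rrds",
    "statistician": "reports/statistician",
}


def link_resources(var_dir="/var/mentat"):
    """Create missing resource symlinks and return the names that were created."""
    data_dir = os.path.join(var_dir, "www", "hawat", "root", "data")
    os.makedirs(data_dir, exist_ok=True)

    created = []
    for name, target in LINKS.items():
        link_path = os.path.join(data_dir, name)
        if os.path.lexists(link_path):
            continue  # leave existing links or files untouched
        os.symlink(os.path.join(var_dir, target), link_path)
        created.append(name)
    return created


# Demo in a throwaway directory: the second run has nothing left to do.
demo_root = tempfile.mkdtemp()
first_run = link_resources(demo_root)
second_run = link_resources(demo_root)
print(first_run, second_run)
```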

Web interface does not start

Please execute the following command as root:

    root@mentat-demo:~# grep "mail_test" /var/log/apache2/error.log
    Couldn't instantiate component "Hawat::Model::Reports", "Attribute (mail_test) is required at /usr/local/lib/x86_64-linux-gnu/perl/5.20.2/Moose/Object.pm line 24

If the output is similar to the example above, the problem is a missing configuration value. This might have happened after an upgrade to a certain version of the Mentat system. In this case the solution is to provide any value for it in the main Hawat configuration file /var/mentat/www/hawat/hawat.json:

    ...
    "Model::Reports": {
        ...
        # Add additional configuration value called 'mail_test'
        "mail_test" : "email@domain.org",
        ...
    },
    "Model::Briefs": {
        ...
        # Add additional configuration value called 'mail_test'
        "mail_test" : "email@domain.org",
        ...
    },
    ...

If you do not want to go through the whole configuration file, another option is to apply the following patch:

    --- /var/mentat/www/hawat/hawat.json.orig 2017-03-31 16:52:09.869829279 +0200
    +++ /var/mentat/www/hawat/hawat.json 2017-03-30 14:33:58.959615672 +0200
    @@ -142,6 +142,7 @@
     "mail_from" : "CESNET-CERTS Reporting <reporting@cesnet.cz>",
     "mail_devel" : "reporter-test@mentat-demo.cesnet.cz",
     "mail_admin" : "mentat-admin@mentat-demo.cesnet.cz",
    + "mail_test" : "reporter-test@mentat-demo.cesnet.cz",
     "mail_bcc" : "mentat-admin@mentat-demo.cesnet.cz",
     "reply_to" : "abuse@cesnet.cz",
     "test_mode": 0,
    @@ -157,6 +158,7 @@
     "mail_from" : "CESNET-CERTS Reporting <reporting@cesnet.cz>",
     "mail_devel" : "reporter-test@mentat-demo.cesnet.cz",
     "mail_admin" : "mentat-admin@mentat-demo.cesnet.cz",
    + "mail_test" : "reporter-test@mentat-demo.cesnet.cz",
     "mail_bcc" : "mentat-admin@mentat-demo.cesnet.cz",
     "reply_to" : "abuse@cesnet.cz",
     "test_mode": 0,

Save it to a local file and then apply the patch using the following command:

    patch /var/mentat/www/hawat/hawat.json /path/to/your/patch/file.patch

We are working on fixing this issue at the package level, so that this does not happen again.